The current trend in the technology world is to free developers from tedious application configuration and management: container images take care of a program's complex library dependencies and keep the runtime environment consistent. With benefits this clear, why haven't enterprise infrastructures switched to container clusters? The key issue is still risk. New technology means untested technology and little practical experience, which brings many unpredictable risks. When an enterprise operations team takes on an elastic container cluster, traditional software deployments have to be migrated into containers, and that process calls for anticipating and avoiding risks. Docker and Rancher offer solutions to these risks. For example, Rancher's Catalog feature makes it quick to deploy basic software such as Elasticsearch. In terms of risk management, we can look at five key points of running an elastic cluster on Docker and Rancher:

- Running Rancher in high-availability mode (covered in this article)

- Using the load balancing service in Rancher

- Service monitoring and health checks in Rancher

- Customizing the installation and deployment of Rancher for developers

- Using Convoy to manage data

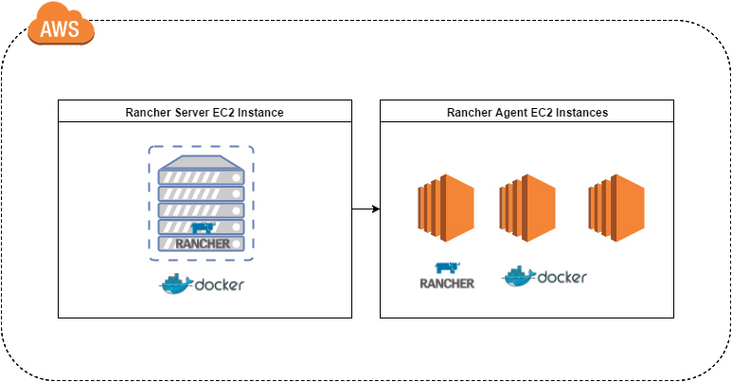

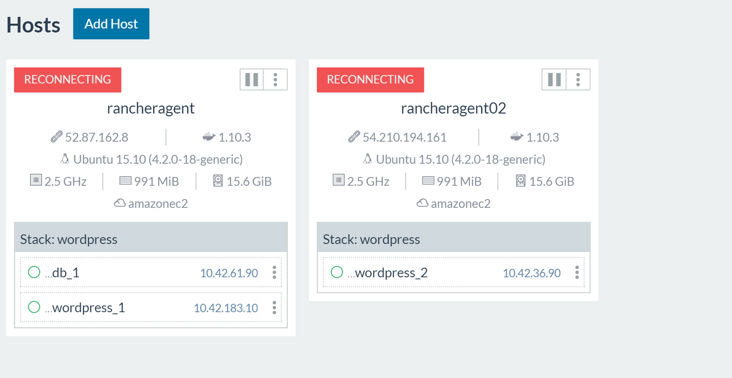

I originally wanted to show how to deploy Rancher Server on an old laptop and then use docker-machine to add several Raspberry Pi nodes running Rancher Agent, building a Rancher cluster that way. The problem is that most Docker images are built for Intel (x86) CPUs and won't run on the Raspberry Pi's ARM CPU. So I'll stick with AWS virtual machines for the Rancher cluster. For the initial installation, we deploy one Rancher Server and one Rancher Agent, then deploy a simple multi-instance application.

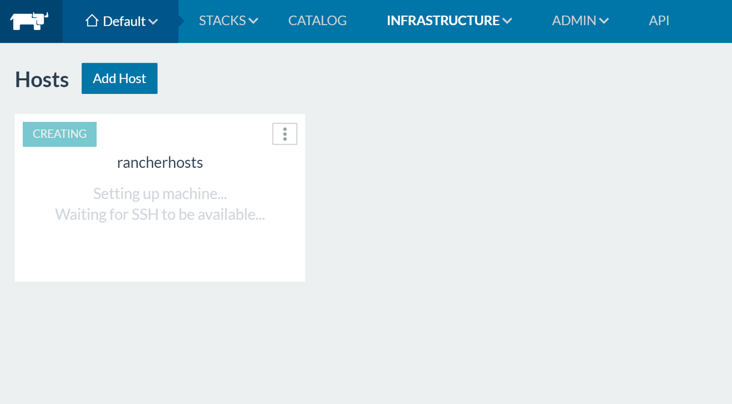

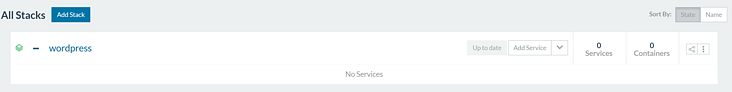

The picture above shows the setup of my entire cluster. I chose AWS because I'm personally familiar with it; you can, of course, use whichever cloud provider you know best.

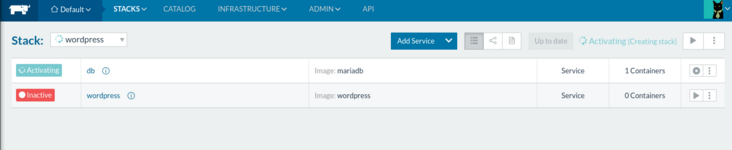

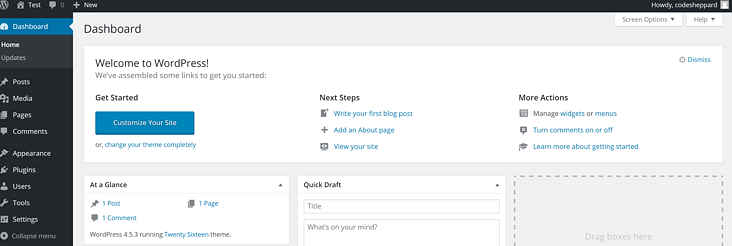

Let's create a WordPress application to check that Rancher is deployed correctly.

Our application is now up and running. But imagine that the server hosting Rancher Server fails, or the network goes down: what happens to the application? Can WordPress keep accepting requests?

To find out, I will go through the following steps and record the results:

- Block the network between Rancher Server and Rancher Agent

- Stop the Rancher Server container

- Look at what is inside the Rancher Server container

Finally, to solve these problems, we'll look at how Rancher HA mitigates these risks.

Block the network between Rancher Server and Rancher Agent

Over in AWS, here is what I did:

- Block access between Rancher Server and Rancher Agent

- Record what happened

- Kill a few instances of WordPress

- Restore the original network
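I made these changes in the AWS console. If you want to reproduce the experiment on an arbitrary Linux host, here is a minimal sketch that uses iptables on the agent host instead; that choice is an assumption, not what I did on AWS, and the server IP in the usage line is a placeholder.

```sh
# Sketch: cut and restore traffic between this Rancher Agent host and the
# Rancher Server. Run as root on the agent host. Using iptables is an
# assumption; the original experiment changed AWS network settings instead.

block_rancher_server() {
    # $1 = Rancher Server IP. Insert DROP rules at the top of both chains.
    iptables -I OUTPUT -d "$1" -j DROP &&
        iptables -I INPUT -s "$1" -j DROP
}

unblock_rancher_server() {
    # Delete exactly the rules added above to restore the original network.
    iptables -D OUTPUT -d "$1" -j DROP &&
        iptables -D INPUT -s "$1" -j DROP
}
```

Usage: `block_rancher_server 10.0.0.10`, run the experiment, then `unblock_rancher_server 10.0.0.10`. Only packets to and from the server IP are dropped; everything else on the agent host is untouched.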

Observation results

First, shortly after the network was blocked, the Rancher Host went into the reconnecting state.

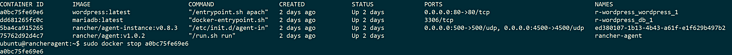

At this point, I can still reach the WordPress site normally: the service is not interrupted, the IPSec tunnel network is still intact, and the WordPress instances can still reach the database. Now let's stop one WordPress instance and see what happens. Since we can no longer manage these containers from the Rancher UI, we run the docker command directly on the corresponding host.
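A minimal sketch of that step, assuming the WordPress container names contain "wordpress" (check `docker ps` for the names Rancher actually generated on your host):

```sh
# Sketch: with the Rancher UI unreachable, stop one WordPress container
# directly on its host. The "wordpress" name filter is an assumption.
stop_one_wordpress() {
    name=$(docker ps --filter "name=wordpress" --format '{{.Names}}' | head -n 1)
    if [ -z "$name" ]; then
        echo "no running WordPress container found" >&2
        return 1
    fi
    docker stop "$name"
}
```

Afterwards, `docker ps -a` shows the stopped container with an Exited status.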

Unfortunately, the WordPress container did not restart, which is a bit troublesome. Let's bring Rancher Server back and see whether it notices that one of the WordPress containers has stopped. After restoring the network and waiting a while, Rancher Server and Agent reconnected, and the WordPress container was restarted. Perfect, right? So Rancher Server copes with intermittent network problems: Server and Agent reconnect after a failure. But to further reduce the impact of network interruptions on Rancher, it is more important to deploy multiple Rancher Servers. Now let's go back to our plan and see what happens when the Rancher Server container is stopped. Will we lose the ability to manage these Rancher Hosts? Let's find out!

Stop Rancher Server

This time I log in to the Rancher Server host and stop Rancher Server manually. In practice, Rancher Server is usually started with `--restart=always`, so it has some ability to recover on its own. But let's assume Rancher Server really can't come back up, for example because the disk is full.

Observation results

After running `docker stop rancher-server`, WordPress still works normally, but the Rancher UI can no longer be accessed. That won't do; we need Rancher Server back quickly. After running `docker start rancher-server`, Rancher Server starts up and everything returns to normal. Cool! What kind of magic is this? Let's dig in and analyze it!
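To see whether your own Rancher Server container would come back by itself, you can check its restart policy. A minimal sketch, assuming the container is named rancher-server as in the commands above:

```sh
# Sketch: print the restart policy of the Rancher Server container
# (for example always, unless-stopped, on-failure or no).
restart_policy() {
    docker inspect --format '{{.HostConfig.RestartPolicy.Name}}' "${1:-rancher-server}"
}

# For reference, a single-node Rancher Server started with that policy
# (rancher/server serves its UI on port 8080):
#   docker run -d --name rancher-server --restart=always -p 8080:8080 rancher/server
```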

Uncover the mystery of Rancher Server

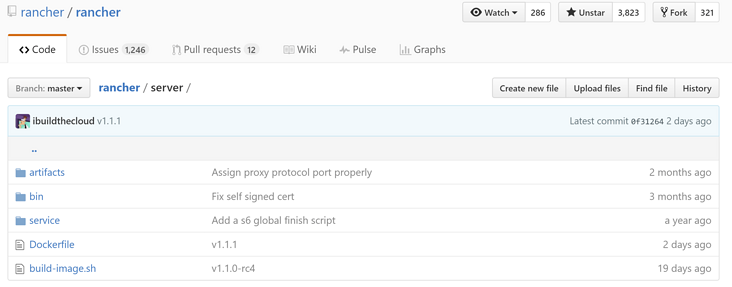

Let's take a brief tour of Rancher Server, starting with its Dockerfile.

```dockerfile
# Dockerfile contents
FROM ...
...
...
CMD ["/usr/bin/s6-svscan", "/service"]
```

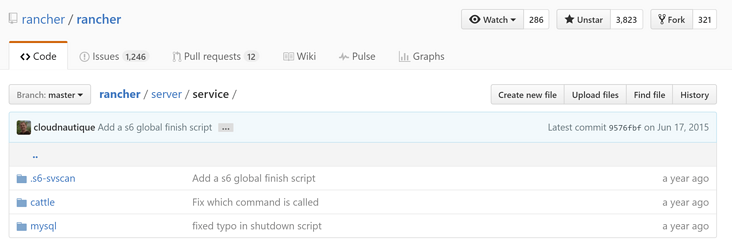

We can see that it uses s6-svscan, which runs programs laid out in a specified directory structure. The main files in each service directory are run, down, and finish. In the picture above, you can see two services running: cattle (Rancher's core scheduler) and MySQL.
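For reference, here is a minimal sketch of the directory layout s6-svscan scans. The service name and command are hypothetical; the file roles (an executable run, an optional finish, an optional down marker) follow s6's conventions.

```sh
# Sketch: create an s6 service directory like the ones under /service in
# the rancher/server image. s6-svscan starts one s6-supervise per
# subdirectory, which runs ./run and restarts it whenever it exits.
make_s6_service() {
    # $1 = scan directory, $2 = service name, $3 = foreground command,
    # $4 = "down" to keep the service from starting automatically
    dir="$1/$2"
    mkdir -p "$dir" || return 1
    # run: must exec the long-running process in the foreground.
    printf '#!/bin/sh\nexec %s\n' "$3" > "$dir/run"
    # finish: runs after ./run exits; s6 passes the exit code as $1.
    printf '#!/bin/sh\necho "%s exited with code $1" >&2\n' "$2" > "$dir/finish"
    # down: its presence tells s6-supervise not to start the service on its own.
    if [ "${4:-}" = down ]; then
        : > "$dir/down"
    fi
    chmod +x "$dir/run" "$dir/finish"
}
```

Usage: `make_s6_service /service demo 'sleep 1000'`; running `s6-svscan /service` would then supervise it the same way the image supervises cattle and mysql.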

In fact, we can also see what services are launched inside the container.

```
PID TTY STAT TIME COMMAND
1 ? Ss 0:00 /usr/bin/s6-svscan /service
7 ? S 0:00 s6-supervise cattle
8 ? S 0:00 s6-supervise mysql
9 ? Ssl 0:57 java -Xms128m -Xmx1g -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/var/lib/cattle/logs -Dlogback.bootstrap.level=WARN -cp /usr/share/cattle/1792f92ccdd6495127a28e16a685da7
135 ? Sl 0:01 websocket-proxy
141 ? Sl 0:00 rancher-catalog-service -catalogUrl library=https://github.com/rancher/rancher-catalog.git,community=https://github.com/rancher/community-catalog.git -refreshInterval 300
142 ? Sl 0:00 rancher-compose-executor
143 ? Sl 0:00 go-machine-service
1517 ? Ss 0:00 bash
1537 ? R+ 0:00 ps x
```

Here we see the brain of Rancher, a Java application called Cattle, which needs MySQL to store its data. This is really convenient, but it is also a single point of failure: all the data lives in a single MySQL instance. What happens if we do something impolite to the data in MySQL?

Destroy MySQL storage data

Let's enter the container and run some MySQL commands to do something bad together:

```
docker exec -it rancher-server bash
$ mysql
mysql> use cattle;
mysql> SET FOREIGN_KEY_CHECKS = 0;
mysql> truncate service;
mysql> truncate network;
```

The result is predictable: Rancher no longer remembers anything. If you delete a WordPress container, it won't be restored.

Since I also deleted the data in the network table, WordPress no longer knows how to find its MySQL service.

Clearly, if we want to run Rancher in a production environment, we need a way to protect Rancher Server's data. That brings us to Rancher HA.
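Short of full HA, a first line of defense is a regular dump of the cattle database. A minimal sketch, assuming mysqldump ships in the rancher-server container alongside the mysql client used above:

```sh
# Sketch: dump the embedded cattle database to a file on the host, and load
# such a dump back. Assumes mysqldump exists in the rancher-server container.
backup_cattle() {
    # --single-transaction takes a consistent snapshot of InnoDB tables
    # without locking them for the duration of the dump.
    docker exec rancher-server mysqldump --single-transaction cattle > "${1:-cattle-backup.sql}"
}

restore_cattle() {
    # -i keeps stdin open so the dump on the host is fed into mysql.
    docker exec -i rancher-server mysql cattle < "${1:-cattle-backup.sql}"
}
```

Running `backup_cattle` before the experiment and `restore_cattle` afterwards should bring the truncated service and network tables back, since a default mysqldump recreates each table it contains.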

Rancher HA installation process

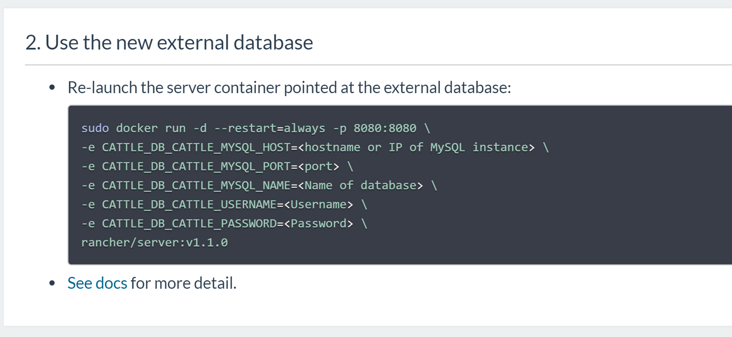

First, we must make sure the data is safe. AWS RDS is a good choice, since it provides a reliable MySQL service and keeps the data safe. Of course, you can use something else; I'm just more familiar with RDS. Let's continue with the Rancher HA installation:

As planned, I created an RDS MySQL instance and connected Rancher Server to it as an external database.
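If you prepare the RDS database by hand, a minimal sketch follows. The database name cattle matches the embedded database we saw earlier; the endpoint, user names and password are placeholders, and `CREATE USER IF NOT EXISTS` needs MySQL 5.7 or later.

```sh
# Sketch: create the cattle database and a dedicated user on an external
# MySQL such as RDS. The password must not contain single quotes, because
# it is pasted into the SQL verbatim.
cattle_db_sql() {
    # $1 = Rancher DB user, $2 = its password
    cat <<EOF
CREATE DATABASE IF NOT EXISTS cattle COLLATE = 'utf8_general_ci' CHARACTER SET = 'utf8';
CREATE USER IF NOT EXISTS '$1'@'%' IDENTIFIED BY '$2';
GRANT ALL PRIVILEGES ON cattle.* TO '$1'@'%';
EOF
}

create_cattle_db() {
    # $1 = endpoint, $2 = admin user (password read from ~/.my.cnf),
    # $3/$4 = user and password to create for Rancher
    cattle_db_sql "$3" "$4" | mysql -h "$1" -u "$2"
}
```

Usage: `create_cattle_db rancher-db.example.com admin cattle 's3cret'`.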

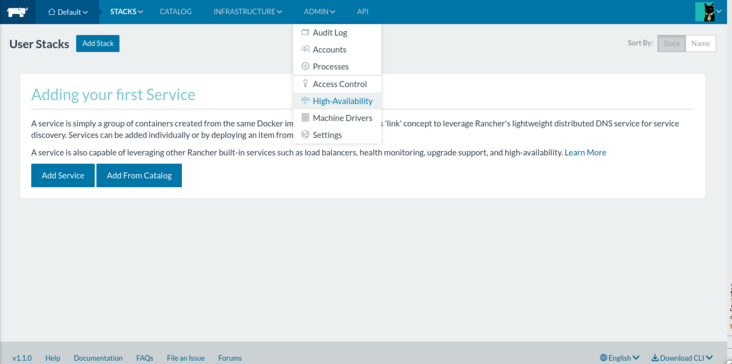

Once we use the external database mode, two options will open on the UI to set up HA.

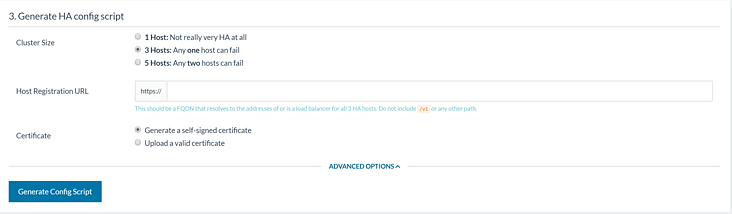

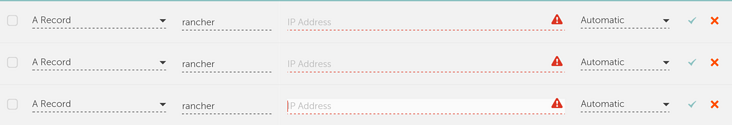


What to do? Decisions, decisions! Don't worry, let me explain what each option means. Cluster size: notice that only odd numbers are offered. That's because Rancher sets up a ZooKeeper quorum in the backend to keep locks synchronized, and ZooKeeper recommends an odd number of nodes because adding a node to reach an even count provides no additional fault tolerance. Let's choose 3 hosts here, a balance between availability and ease of use. Host registration URL: enter the FQDN that serves as the entry point to the Rancher cluster; an external load balancer or a DNS service (round-robin) is recommended. For example, in the figure I use a DNS service that supports SRV records to load-balance the three Rancher Servers through DNS.
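The arithmetic behind the odd cluster sizes is easy to check. A ZooKeeper ensemble of n servers keeps working only while a majority, floor(n/2) + 1 of them, is up, so it survives n - (floor(n/2) + 1) failures:

```sh
# Sketch: quorum size and tolerated failures for a ZooKeeper ensemble of n
# servers. A majority, floor(n/2) + 1, must stay up for the ensemble to work.
zk_quorum() {
    echo $(( $1 / 2 + 1 ))
}

zk_tolerated_failures() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 2 3 4 5 6 7; do
    echo "$n nodes: quorum $(zk_quorum "$n"), tolerates $(zk_tolerated_failures "$n") failure(s)"
done
```

Three nodes tolerate one failure; a fourth node raises the quorum to three but still tolerates only one failure, which is why even sizes buy nothing.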

SSL certificates is the last option in the list. If you have an SSL certificate for the domain name, configure it here; otherwise Rancher automatically generates a self-signed certificate. Once everything is filled in, you can download a rancher-ha.sh script and run it on each Rancher Server node. Before running it, note that only Docker v1.10.3 is currently supported. To install that specific version of Docker Engine, you can use the following method:

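```bash
#!/bin/bash
# Install Docker Engine 1.10.3 on Ubuntu 14.04 (trusty). Run as root.
set -e

apt-get update
apt-get install -y -q apt-transport-https ca-certificates

# Docker's legacy apt repository (apt.dockerproject.org, since retired) and its key
apt-key adv --keyserver hkp://p80.pool.sks-keyservers.net:80 --recv-keys 58118E89F3A912897C070ADBF76221572C52609D
echo "deb https://apt.dockerproject.org/repo ubuntu-trusty main" > /etc/apt/sources.list.d/docker.list

apt-get update
apt-get install -y -q docker-engine=1.10.3-0~trusty

# Stop routine upgrades from moving Docker past the supported version
apt-mark hold docker-engine

# run the command below to show all available versions
# apt-cache showpkg docker-engine
```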
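Since rancher-ha.sh only supports Docker 1.10.3, a small guard before running it can save a confusing failure later. A minimal sketch; `docker version --format` is a standard Docker CLI option, and the function name is my own:

```sh
# Sketch: stop early unless the Docker engine on this node is exactly the
# version rancher-ha.sh supports (1.10.3, per the text above).
require_docker_version() {
    want=${1:-1.10.3}
    # Ask the daemon (not just the client) which version it is running.
    have=$(docker version --format '{{.Server.Version}}') || return 1
    if [ "$have" != "$want" ]; then
        echo "found Docker $have, but rancher-ha.sh needs $want" >&2
        return 1
    fi
}
```

Usage: `require_docker_version && ./rancher-ha.sh`.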

After Docker is installed, make sure the required ports are open; the Rancher HA documentation lists which ones. If this is your first installation, though, I suggest opening all the ports so you don't dig yourself into a hole too deep to climb out of. Once everything is ready, run the script; you should see output like this:

```
...
ed5d8e75b7be: Pull complete
7ebc9fcbf163: Pull complete
ffe47ea37862: Pull complete
b320962f9dbe: Pull complete
Digest: sha256:aff7c52e52a80188729c860736332ef8c00d028a88ee0eac24c85015cb0e26a7
Status: Downloaded newer image for rancher/server:latest
Started container rancher-ha c41f0fb7c356a242c7fbdd61d196095c358e7ca84b19a66ea33416ef77d98511
Run the below to see the logs

docker logs -f rancher-ha
```

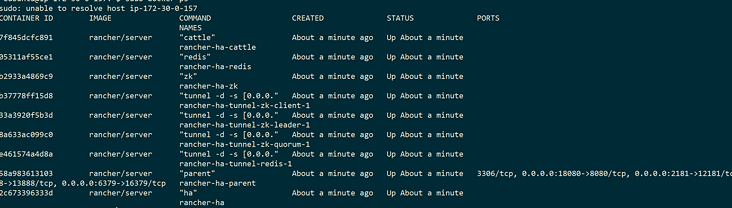

Some additional images are downloaded while the script runs; HA features need them, after all. Also, each host should have at least 4 GB of memory. Once the script finishes, you can check what has been started with `docker ps`.

Common problems and solutions

Normally, the log shows each member joining the Rancher HA cluster:

```
time="2016-07-22T04:13:22Z" level=info msg="Cluster changed, index=0, members=[172.30.0.209, 172.30.0.111, ]" component=service
...
time="2016-07-22T04:13:34Z" level=info msg="Cluster changed, index=3, members=[172.30.0.209, 172.30.0.111, 172.30.0.69]" component=service
```
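To check membership without scrolling through the log, a small filter can pull out the latest member list. A minimal sketch that relies only on the `Cluster changed ... members=[...]` format shown above:

```sh
# Sketch: print the members listed in the most recent "Cluster changed"
# line of the rancher-ha log, one IP per line. Reads the log on stdin.
latest_members() {
    # Take the last membership line, keep what is inside members=[...],
    # split on commas, trim spaces, and drop the empty trailing entry.
    grep 'Cluster changed' | tail -n 1 |
        sed -n 's/.*members=\[\([^]]*\)].*/\1/p' |
        tr ',' '\n' |
        sed 's/^ *//; s/ *$//' |
        grep -v '^$'
}
```

Usage: `docker logs rancher-ha 2>&1 | latest_members` should list three IPs for a three-node cluster.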

But sometimes things go wrong, with error messages like these:

```
time="2016-07-23T14:37:02Z" level=info msg="Waiting for server to be available" component=cert
time="2016-07-23T14:37:02Z" level=info msg="Can not launch agent right now: Server not available at http://172.17.0.1:18080/ping:" component=service
```

This problem has many possible causes. I went through GitHub issues and various forum posts to sort out the most common root causes for you.

Network settings problem

Sometimes the container picks up the wrong IP for the node. In that case, you need to specify the correct IP explicitly.

ZooKeeper did not start normally

ZooKeeper containers run on every node. If a problem between nodes prevents the ZooKeeper containers from communicating, you get this error. To confirm it, check the ZooKeeper containers' log output.
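A minimal diagnostic sketch for this case. It assumes ZooKeeper is reachable on each node at its default ports (2181 for clients, 3888 for leader election) and answers the `stat` four-letter command, which ZooKeeper 3.4 enables by default (3.5+ requires whitelisting it); the port layout inside Rancher's containers is not confirmed here.

```sh
# Sketch: check ZooKeeper connectivity between HA nodes. Port 2888 (the
# peer port) is only listened on by the current leader, so it is not probed.
# Needs a netcat that supports -z and -w.
ZK_PORTS="2181 3888"

check_zk_ports() {
    failed=0
    for host in "$@"; do
        for port in $ZK_PORTS; do
            if ! nc -z -w 2 "$host" "$port"; then
                echo "$host:$port unreachable" >&2
                failed=1
            fi
        done
    done
    return "$failed"
}

zk_mode() {
    # Prints leader, follower or standalone; nothing if the node is down.
    echo stat | nc -w 2 "$1" 2181 | sed -n 's/^Mode: //p'
}

count_zk_leaders() {
    leaders=0
    for host in "$@"; do
        if [ "$(zk_mode "$host")" = leader ]; then
            leaders=$((leaders + 1))
        fi
    done
    echo "$leaders"
}
```

A healthy three-node ensemble reports exactly one leader: `count_zk_leaders 172.30.0.209 172.30.0.111 172.30.0.69` should print 1.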

There are residual files in the directory /var/lib/rancher/state

If you run the rancher-ha.sh script multiple times, clean up the leftover files in this directory before each run.
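A minimal reset sketch for a node before re-running the script. It assumes the container name rancher-ha from the script output above; the script may start other containers too, which you would remove the same way. It deletes the node's local HA state, so use it only on a node you intend to rebuild.

```sh
# Sketch: remove the HA container and wipe leftover state before rerunning
# rancher-ha.sh. The directory defaults to the one named in the text.
reset_ha_node() {
    state_dir=${1:-/var/lib/rancher/state}
    # Remove the HA container; ignore "No such container" errors.
    docker rm -f rancher-ha >/dev/null 2>&1 || true
    # ${state_dir:?} aborts if the variable is somehow empty.
    rm -rf "${state_dir:?}" && mkdir -p "$state_dir"
}
```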

HA state is destroyed after many attempts

Drop the database and try again; when in doubt, restart.

Insufficient machine resources

At least 4 GB of memory is required. In addition, MySQL must allow enough connections: budget about 50 connections for each HA node. If MySQL starts refusing connections, this is almost certainly the cause.
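The connection budget is easy to check against the database. A minimal sketch using the 50-connections-per-node figure above; the host is a placeholder and credentials are assumed to come from ~/.my.cnf:

```sh
# Sketch: compare MySQL's max_connections with the ~50 connections per HA
# node suggested above.
required_connections() {
    echo $(( $1 * 50 ))
}

check_max_connections() {
    # $1 = database host, $2 = number of HA nodes
    have=$(mysql -h "$1" -N -B -e 'SELECT @@max_connections') || return 1
    need=$(required_connections "$2")
    if [ "$have" -lt "$need" ]; then
        echo "max_connections is $have, but $2 HA nodes need about $need" >&2
        return 1
    fi
    echo "max_connections is $have (need about $need)"
}
```

For three nodes, `check_max_connections rancher-db.example.com 3` expects at least 150. On RDS, max_connections is changed through the instance's DB parameter group.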

The rancher/server version does not match

When rancher-ha.sh runs, it downloads the rancher/server:latest image by default. If the host does not already have a suitable image, version differences can cause unexpected problems. It is therefore best practice to specify the version explicitly, for example:

```
./rancher-ha.sh rancher/server:
```
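A small guard against accidentally running with latest. This is a hypothetical helper that only inspects the image string; it does not talk to a registry.

```sh
# Sketch: reject an image reference that is untagged or tagged "latest".
require_pinned_image() {
    # Only the part after the last "/" can carry the tag, so a registry
    # port such as registry:5000/... is not mistaken for one.
    last=${1##*/}
    case "$last" in
        *:latest|*:)
            echo "refusing '$1': pin an explicit version tag" >&2
            return 1 ;;
        *:*)
            return 0 ;;
        *)
            echo "refusing '$1': no tag, so Docker would pull latest" >&2
            return 1 ;;
    esac
}
```

Usage: with `IMAGE` set to the version you tested (a hypothetical variable), `require_pinned_image "$IMAGE" && ./rancher-ha.sh "$IMAGE"`.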

docker0 returned the wrong IP

This error comes from the agent health check that runs during HA installation: the check used the wrong docker0 IP address, because I had previously set docker0 to 172.17.42.1.

```
$ curl localhost:18080/ping
pong
$ curl http://172.17.0.1:18080/ping
curl: (7) Failed to connect to 172.17.0.1 port 18080: Connection refused
```

My solution was to reinstall the AWS virtual machine that was getting the wrong docker0 IP; I suspect I had installed Docker on it several times. After reinstalling, everything worked and I saw the expected log output.
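Before resorting to a reinstall, you can compare docker0's actual address with the one the installer probed. A minimal sketch, assuming iproute2's `ip` command and curl are installed:

```sh
# Sketch: print docker0's IPv4 address, then probe the same /ping endpoint
# (port 18080, from the log above) on a given address.
docker0_ip() {
    # Field 4 of "ip -o" output is ADDRESS/PREFIX; keep only the address.
    ip -4 -o addr show dev docker0 | awk '{ split($4, a, "/"); print a[1] }'
}

ping_rancher() {
    # Prints "pong" when the endpoint answers on $1.
    curl -fsS "http://$1:18080/ping"
}
```

Usage: `ping_rancher "$(docker0_ip)"`. If `docker0_ip` prints 172.17.42.1 rather than the 172.17.0.1 the installer probed, the bridge address has been overridden; the Docker daemon's `--bip` option is one place such an override is set.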

After HA is deployed

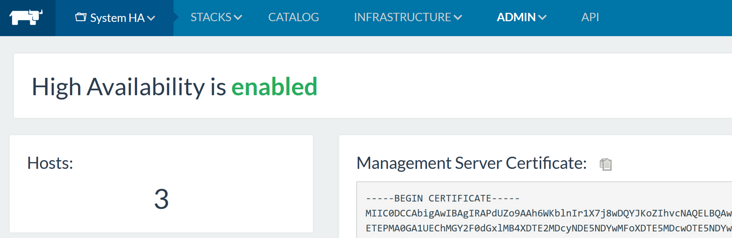

We finally get the correct log output we had been dreaming of, along with a beautiful UI like the one above:

```
time="2016-07-24T20:00:11Z" level=info msg="[0/10] [zookeeper]: Starting "
time="2016-07-24T20:00:12Z" level=info msg="[1/10] [zookeeper]: Started "
time="2016-07-24T20:00:12Z" level=info msg="[1/10] [tunnel]: Starting "
time="2016-07-24T20:00:13Z" level=info msg="[2/10] [tunnel]: Started "
time="2016-07-24T20:00:13Z" level=info msg="[2/10] [redis]: Starting "
time="2016-07-24T20:00:14Z" level=info msg="[3/10] [redis]: Started "
time="2016-07-24T20:00:14Z" level=info msg="[3/10] [cattle]: Starting "
time="2016-07-24T20:00:15Z" level=info msg="[4/10] [cattle]: Started "
time="2016-07-24T20:00:15Z" level=info msg="[4/10] [go-machine-service]: Starting "
time="2016-07-24T20:00:15Z" level=info msg="[4/10] [websocket-proxy]: Starting "
time="2016-07-24T20:00:15Z" level=info msg="[4/10] [rancher-compose-executor]: Starting "
time="2016-07-24T20:00:15Z" level=info msg="[4/10] [websocket-proxy-ssl]: Starting "
time="2016-07-24T20:00:16Z" level=info msg="[5/10] [websocket-proxy]: Started "
time="2016-07-24T20:00:16Z" level=info msg="[5/10] [load-balancer]: Starting "
time="2016-07-24T20:00:16Z" level=info msg="[6/10] [rancher-compose-executor]: Started "
time="2016-07-24T20:00:16Z" level=info msg="[7/10] [go-machine-service]: Started "
time="2016-07-24T20:00:16Z" level=info msg="[8/10] [websocket-proxy-ssl]: Started "
time="2016-07-24T20:00:16Z" level=info msg="[8/10] [load-balancer-swarm]: Starting "
time="2016-07-24T20:00:17Z" level=info msg="[9/10] [load-balancer-swarm]: Started "
time="2016-07-24T20:00:18Z" level=info msg="[10/10] [load-balancer]: Started "
time="2016-07-24T20:00:18Z" level=info msg="Done launching management stack" component=service
time="2016-07-24T20:00:18Z" level=info msg="You can access the site at https://" component=service
```
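Rather than watching `docker logs -f` by hand, you can poll for the final line. A minimal sketch that assumes the container name rancher-ha and the "Done launching management stack" message shown above:

```sh
# Sketch: poll the rancher-ha container's log until the management stack
# reports that it is up.
stack_ready() {
    docker logs rancher-ha 2>&1 | grep -q "Done launching management stack"
}

wait_for_stack() {
    tries=${1:-60}    # number of checks, 5 seconds apart (5 minutes by default)
    while [ "$tries" -gt 0 ]; do
        if stack_ready; then
            echo "management stack is up"
            return 0
        fi
        tries=$((tries - 1))
        sleep 5
    done
    echo "management stack did not finish launching" >&2
    return 1
}
```

Polling with a finite number of tries avoids a pipeline on `docker logs -f` that can hang after the message has already appeared.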

If you use a self-signed certificate and want to access the UI over HTTPS, add the certificate to your trusted certificates. Then, once the database resource limits have been adjusted, the services on all three nodes are fully operational.


Summary

This turned out to be much more involved than I originally thought, which is why distributed systems have long been a subject of Ph.D. research. With all these points of failure addressed, I think Rancher HA is well on its way to being a top-tier distributed system. Eventually I will turn the Rancher HA script into a simple Ansible task, so stay tuned!

Appendix

For any distributed system, the basic pattern is to manage its state and state changes centrally, which means multiple services need a process to coordinate updates. Rancher's approach is to keep all state in the database; when state needs to be synchronized, it puts the state information into an event and sends that event to the other components. While an event is being processed, it holds a lock, and the corresponding state in the database cannot be modified until processing finishes. In a single-server setup, all coordination happens in memory on the host. Once you move to a multi-server HA configuration, components such as ZooKeeper and Redis become necessary.
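On a single host, the hold-a-lock-while-processing idea can be shown in miniature. The sketch below is an analogy, not Rancher's code: flock(1) from util-linux stands in for the in-memory lock, and a file stands in for the database state. Without the lock, concurrent read-modify-write updates could lose increments; with it, each update sees the previous one.

```sh
# Sketch: apply an "event" (increment a counter) while holding an exclusive
# lock, so no other writer can change the state between read and write.
increment_state() {
    # $1 = state file holding an integer; "$1.lock" is the lock file
    (
        flock -x 9 || exit 1
        n=$(cat "$1" 2>/dev/null)
        echo $(( ${n:-0} + 1 )) > "$1"
    ) 9> "$1.lock"
}
```

Running 20 copies of `increment_state /tmp/state` in parallel leaves the file at 20. Across several hosts a local file lock no longer helps, which is exactly where Rancher HA brings in ZooKeeper.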