In paged virtual memory, both the virtual address space and physical main memory are divided into fixed-size pages, and memory is allocated to processes in units of pages. For example, with a 4KB page size, a 4GB address space needs 4GB / 4KB = 1M (1,048,576) page-table entries, one for each 4KB page. If pages are too small, the page table becomes very large, which slows down lookups and wastes the main memory used to store it; if pages are too large, memory is wasted in partially used pages, for reasons similar to those discussed for segmented storage management. As a compromise, the default page size on Linux is 4KB, which can be checked with the following command:

$ getconf PAGE_SIZE

4096
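The page-count arithmetic above can be reproduced with shell arithmetic. This is a minimal sketch: `page_table_entries` is a hypothetical helper name, and it assumes one entry per page in a flat, single-level table, which simplifies the multi-level page tables real CPUs use.

```shell
#!/bin/sh
# Hypothetical helper: number of pages (one page-table entry each, assuming
# a flat single-level table) needed to map MEM_BYTES using PAGE_BYTES pages.
page_table_entries() {
    mem_bytes=$1
    page_bytes=$2
    echo $(( mem_bytes / page_bytes ))
}

GiB=$((1024 * 1024 * 1024))

# 4GB of address space with 4KB pages: prints 1048576 (about 1M entries)
page_table_entries $((4 * GiB)) 4096
```

To use the page size of the running system instead of a hard-coded 4096, pass `"$(getconf PAGE_SIZE)"` as the second argument.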

In scenarios with stringent performance requirements, however, much larger pages are used, such as 1GB or even larger. These are called huge pages. Huge pages improve performance mainly for two reasons:

- They reduce the number of page-table entries, which speeds up address lookups.

- They improve the hit rate of the TLB (translation lookaside buffer). A TLB typically has between 16 and 128 entries, so with 1GB pages it can cover between 16GB and 128GB of memory, versus only 64KB to 512KB with 4KB pages.
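The TLB coverage figures above ("TLB reach") are just the number of entries multiplied by the page size. A minimal sketch, using the 16 to 128 entry range quoted above; `tlb_reach` is a hypothetical helper, and it ignores the fact that real TLBs are often split by level and page size.

```shell
#!/bin/sh
# TLB reach = TLB entries x page size: the amount of memory whose address
# translations can be held in the TLB at once. Hypothetical helper name.
tlb_reach() {
    entries=$1
    page_bytes=$2
    echo $(( entries * page_bytes ))
}

GiB=$((1024 * 1024 * 1024))

# 16 and 128 entries with 4KB pages: 65536 and 524288 bytes (64KB, 512KB)
tlb_reach 16 4096
tlb_reach 128 4096

# 16 and 128 entries with 1GB pages: 17179869184 and 137438953472 bytes (16GB, 128GB)
tlb_reach 16 "$GiB"
tlb_reach 128 "$GiB"
```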

Note that swapping pages between main memory and the swap area is generally disabled when huge pages are used. As with segmented storage management, swapping such large units would make reads and writes to secondary storage the bottleneck for the CPU. All-flash storage in today's data centers has eased this problem, but at the cost of expensive SSDs. Moreover, huge pages can also slow down address lookups within a page, so larger is not always better: finding the page size that gives peak performance requires extensive testing with the application.

Advantages of enabling HugePages

- No swapping: huge pages are never swapped out, so they are not paged in and out when memory runs short.

- Less TLB pressure: fewer address translations need to be cached by the CPU.

- A smaller page table, which takes less memory and less work to maintain.

- Less overhead from page-table lookups.

- Improve overall memory performance.

Disadvantages of enabling HugePages

- HugePages configured at boot allocate and reserve the corresponding amount of memory when the system starts, whether or not applications end up using it.

- After boot, the reserved HugePages are not released or resized unless an administrator intervenes.

HugePages memory in Linux

In Linux, physical memory is managed in units of pages. By default, the page size is 4KB, so 1MB of memory is divided into 256 pages and 1GB into 262,144 pages. The CPU's built-in memory management unit (MMU) translates addresses using a list of these pages (the page table), in which each page has a corresponding entry. The 4KB page size was reasonable when paging was first proposed, because memory sizes were then only a few tens of megabytes. Physical memory has since grown to gigabytes or even terabytes. If the operating system still uses 4KB as the basic page unit, the page tables grow very large and the CPU's TLB can cache only a tiny fraction of their entries, wasting both memory and translation time.

At the same time, when applications with large memory requirements run on Linux, the default 4KB pages cause more TLB misses and page faults, which significantly hurts application performance. When the operating system uses 2MB or larger pages, the number of TLB misses and page faults drops substantially, considerably improving application performance.

Huge pages were introduced in Linux kernel 2.6 to solve these problems. The idea is to replace traditional 4KB pages with large pages to cope with ever-larger memory. Huge pages come in 2MB and 1GB sizes. The 2MB size (the default) suits GB-scale memory, while the 1GB size suits TB-scale memory.
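Which huge page sizes a given kernel supports can be seen in sysfs, where each supported size has a directory named `hugepages-<size>kB` under `/sys/kernel/mm/hugepages` containing an `nr_hugepages` file. The sketch below lists them; `list_hugepage_pools` is a hypothetical helper, and the optional directory argument exists only so the function can be tried against a mock directory.

```shell
#!/bin/sh
# Print one line per supported huge page size:
#   "<size in kB> <pages currently in that pool>"
# BASE defaults to the standard sysfs location.
list_hugepage_pools() {
    base=${1:-/sys/kernel/mm/hugepages}
    for dir in "$base"/hugepages-*kB; do
        [ -d "$dir" ] || continue      # glob did not match: nothing to list
        name=${dir##*/hugepages-}      # e.g. "2048kB"
        size_kb=${name%kB}             # e.g. "2048"
        echo "$size_kb $(cat "$dir/nr_hugepages")"
    done
}

list_hugepage_pools
```

On an x86-64 machine with 1GB page support, this typically shows one line for 2048 kB and one for 1048576 kB.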

How huge pages are implemented

To support huge pages at minimal cost, Linux uses two concepts: hugetlb and hugetlbfs. hugetlb refers to entries recorded in the TLB that point to huge pages, and hugetlbfs is a special file system (essentially an in-memory file system). The main purpose of hugetlbfs is to let applications choose the virtual memory page size they need, instead of forcing a single page size on the whole system. Huge pages are reached through hugetlb entries in the TLB, and the allocated huge pages are provided to processes through the hugetlbfs in-memory file system.

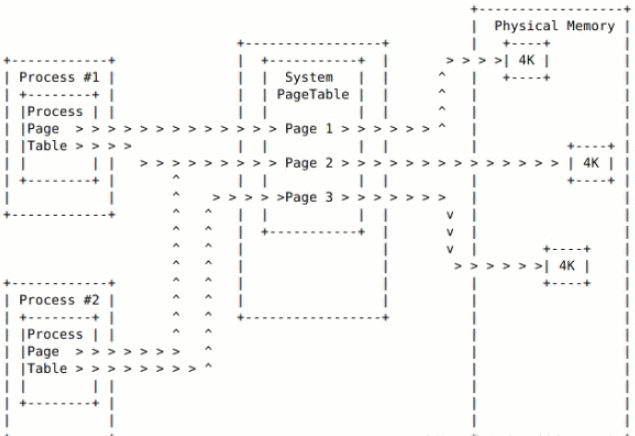

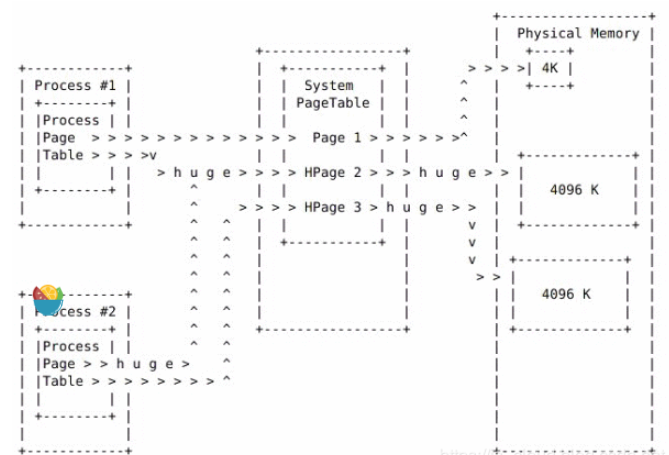

Regular page allocation: when a process requests memory, it goes through the page table to obtain an actual physical memory address, and then gets the memory space.

Huge page allocation: after the system configures huge pages, the process still obtains the actual physical memory address through the ordinary page table. The difference is that a Hugepage (HPage) attribute is added to both the process page table and the system page table.

In other words, when a process needs huge pages, it only has to declare the Hugepage attribute and let the system allocate the corresponding entry in the page table. Regular pages and huge pages therefore share one page table, which is how huge pages are supported with minimal code changes.

The benefits of huge pages are clear. Suppose an application needs 2MB of memory. With 4KB pages, 512 pages are needed, which requires 512 TLB entries and 512 page-table entries, and the operating system must go through at least 512 TLB misses and 512 page faults to map the whole 2MB region to physical memory. With 2MB pages, a single TLB miss and a single page fault are enough to establish the virtual-to-physical mapping for the 2MB region, and no further TLB misses or page faults occur while the application runs (assuming no TLB entry replacement and no swapping).
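The 512-versus-1 comparison generalizes to any region size: the number of first-touch page faults (and TLB misses, under the same no-replacement, no-swap assumption) equals the number of pages the region spans. A minimal sketch, assuming the region starts on a page boundary; `pages_to_map` is a hypothetical helper that rounds up, since a partially used page still has to be mapped.

```shell
#!/bin/sh
# Pages needed to map REGION_BYTES with PAGE_BYTES pages, rounded up.
# Assumes the region is page-aligned. Hypothetical helper name.
pages_to_map() {
    region=$1
    page=$2
    echo $(( (region + page - 1) / page ))
}

MiB=$((1024 * 1024))

pages_to_map $((2 * MiB)) 4096            # 4KB pages: prints 512
pages_to_map $((2 * MiB)) $((2 * MiB))    # one 2MB page: prints 1
```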

In addition, huge pages reduce the time the system spends managing memory pages and the time the processor spends accessing them (by extending the address range covered by the TLB), and the kernel's swap daemon does not manage the memory occupied by huge pages. Configuring huge pages properly lightens the burden of memory operations and lowers the risk of performance bottlenecks caused by page-table accesses, improving system performance. So if your system often suffers performance problems caused by swapping, or has a very large amount of memory, consider enabling huge pages.

Configure HugePages memory on Linux

Each huge page requires a physically contiguous block of memory, so allocating huge pages at boot time, before memory becomes fragmented, is the most reliable approach. The kernel boot parameters for configuring huge pages are:

- hugepages: the number of persistent huge pages the kernel allocates at boot. The default is 0, meaning none are allocated. The allocation succeeds only if the system has enough contiguous free memory, and pages reserved this way cannot be used for anything else.

- hugepagesz: the size of the huge pages allocated at boot. Available values are 2MB and 1GB (written 2M and 1G on the kernel command line); the default is 2MB.

- default_hugepagesz: the default huge page size. This is the size reported as Hugepagesize in /proc/meminfo and used by hugetlbfs mounts that do not specify a page size.
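After a reboot, it is worth confirming that these parameters actually reached the kernel. A minimal sketch: `hugepage_params` is a hypothetical helper that takes a command-line string (for example `"$(cat /proc/cmdline)"`) and prints only the three huge page parameters listed above, one per line.

```shell
#!/bin/sh
# Print the hugepages / hugepagesz / default_hugepagesz parameters found in
# a kernel command-line string, one per line. Hypothetical helper name.
hugepage_params() {
    printf '%s\n' "$1" | tr -s ' ' '\n' |
        grep -E '^(default_hugepagesz|hugepagesz|hugepages)='
}

# Example with a sample command line; on a live system use "$(cat /proc/cmdline)"
hugepage_params "ro quiet default_hugepagesz=1G hugepagesz=1G hugepages=10"
```

Anchoring the pattern at the start of each word keeps unrelated options such as `transparent_hugepage=never` out of the result.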

Step 1. Check whether the Linux operating system has huge page memory enabled. If HugePages_Total is 0, it means that Linux has not set or enabled Huge pages.

$ grep -i HugePages_Total /proc/meminfo

HugePages_Total: 0
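The same check can be scripted, for example in a provisioning script. A minimal sketch: `hugepages_total` is a hypothetical helper that reads the HugePages_Total field from a meminfo-format file, defaulting to /proc/meminfo.

```shell
#!/bin/sh
# Print the HugePages_Total value from a meminfo-format file.
hugepages_total() {
    awk '/^HugePages_Total:/ { print $2 }' "${1:-/proc/meminfo}"
}

total=$(hugepages_total)
if [ "${total:-0}" -gt 0 ]; then
    echo "huge pages enabled: $total pages"
else
    echo "huge pages not configured (HugePages_Total is 0)"
fi
```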

Step 2. Check if hugetlbfs is mounted

$ mount | grep hugetlbfs

hugetlbfs on /dev/hugepages type hugetlbfs (rw,relatime)
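A script-friendly variant of this check reads /proc/mounts, whose fields are device, mount point, file system type, options, dump and pass. A minimal sketch; `hugetlbfs_mounts` is a hypothetical helper that prints the mount point and options of each hugetlbfs mount.

```shell
#!/bin/sh
# Print "<mount point> <options>" for every hugetlbfs entry in a
# mounts-format file (defaults to /proc/mounts).
hugetlbfs_mounts() {
    awk '$3 == "hugetlbfs" { print $2, $4 }' "${1:-/proc/mounts}"
}

if [ -z "$(hugetlbfs_mounts)" ]; then
    echo "hugetlbfs is not mounted"
else
    hugetlbfs_mounts
fi
```

The options column includes the `pagesize=` value, which shows which huge page pool each mount draws from.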

Step 3. If it is not mounted, mount it manually, then add an entry to /etc/fstab so the mount persists across reboots

$ mkdir /mnt/huge_1GB

$ mount -t hugetlbfs -o pagesize=1G nodev /mnt/huge_1GB

$ vim /etc/fstab

nodev /mnt/huge_1GB hugetlbfs pagesize=1GB 0 0
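If this step is automated, appending the fstab entry blindly can duplicate it on every run. A minimal sketch of an idempotent append; `add_line_once` is a hypothetical helper, and backing up /etc/fstab before editing it is assumed good practice.

```shell
#!/bin/sh
# Append LINE to FILE only if an identical line is not already present.
# Hypothetical helper; writing /etc/fstab requires root.
add_line_once() {
    file=$1
    line=$2
    grep -qxF -- "$line" "$file" 2>/dev/null ||
        printf '%s\n' "$line" >> "$file"
}

# Usage (as root):
# add_line_once /etc/fstab "nodev /mnt/huge_1GB hugetlbfs pagesize=1GB 0 0"
```

`grep -x -F` matches the whole line literally, so an entry that differs only in options is still added rather than silently skipped.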

Step 4. Modify the GRUB 2 configuration. For example, to configure ten 1GB huge pages for the system:

$ vim /etc/grub2.cfg

# go to the line starting with linux16 /vmlinuz-3.10.0-327.el7.x86_64 and append the following parameters to it

default_hugepagesz=1G hugepagesz=1G hugepages=10

NOTE: With huge pages configured, the system tries at boot to find and reserve hugepages * hugepagesz of memory (10 × 1GB = 10GB in this example), with each huge page occupying a physically contiguous block. If there is not enough memory, booting may fail with a kernel panic, out-of-memory, or other errors.
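Before rebooting, a rough sanity check can compare hugepages × hugepagesz with the machine's memory. A minimal sketch under stated assumptions: `size_to_bytes` and `reservation_fits` are hypothetical helpers, the size suffixes K/M/G are treated as powers of 1024, and passing the check does not guarantee a successful boot, because the kernel and other software also need memory and fragmentation can prevent contiguous allocation.

```shell
#!/bin/sh
# Convert a hugepagesz-style value (e.g. 2M, 1G) to bytes.
size_to_bytes() {
    case $1 in
        *[Gg]) echo $(( ${1%?} * 1024 * 1024 * 1024 )) ;;
        *[Mm]) echo $(( ${1%?} * 1024 * 1024 )) ;;
        *[Kk]) echo $(( ${1%?} * 1024 )) ;;
        *)     echo $(( $1 )) ;;
    esac
}

# Succeed if COUNT pages of SIZE are smaller than MEMTOTAL_KB
# (the MemTotal value from /proc/meminfo). Leave generous headroom.
reservation_fits() {
    size=$1
    count=$2
    memtotal_kb=$3
    need=$(( $(size_to_bytes "$size") * count ))
    [ "$need" -lt $(( memtotal_kb * 1024 )) ]
}

# The example above: 10 x 1G, prints 10737418240 (bytes)
echo $(( $(size_to_bytes 1G) * 10 ))

# On Linux: reservation_fits 1G 10 "$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)"
```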

Step 5. Reboot and view more detailed hugepages memory information

$ cat /proc/meminfo | grep -i Huge

AnonHugePages: 1433600 kB

HugePages_Total: 0

HugePages_Free: 0

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 1048576 kB

If only one huge page size is in use, the memory occupied by huge pages is Hugepagesize × HugePages_Total from /proc/meminfo. This memory is counted as used even if no application has actually touched it. As a result, you may see a large amount of used memory in the output of free while the %MEM values in top or ps add up to very little.
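The calculation described above can be done directly from /proc/meminfo. A minimal sketch: `hugepage_pool_kb` is a hypothetical helper that multiplies Hugepagesize (in kB) by HugePages_Total, so like the calculation itself it is only meaningful when a single huge page size is in use.

```shell
#!/bin/sh
# Memory reserved by the default-size huge page pool, in kB:
# Hugepagesize (kB) x HugePages_Total, read from a meminfo-format file.
hugepage_pool_kb() {
    awk '/^HugePages_Total:/ { total = $2 }
         /^Hugepagesize:/    { size = $2 }
         END                 { print total * size }' "${1:-/proc/meminfo}"
}

hugepage_pool_kb
```

With ten 1GB pages (HugePages_Total: 10, Hugepagesize: 1048576 kB), this gives 10485760 kB, all of which free reports as used.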